Priority in MongoDB replica sets

I recently ran into an interesting case with a MongoDB replica set that I'd like to share. The replica set consisted of four nodes, and when the primary went down, no other node was elected as the new primary. The replica set was left in read-only mode. So, what is going on here?

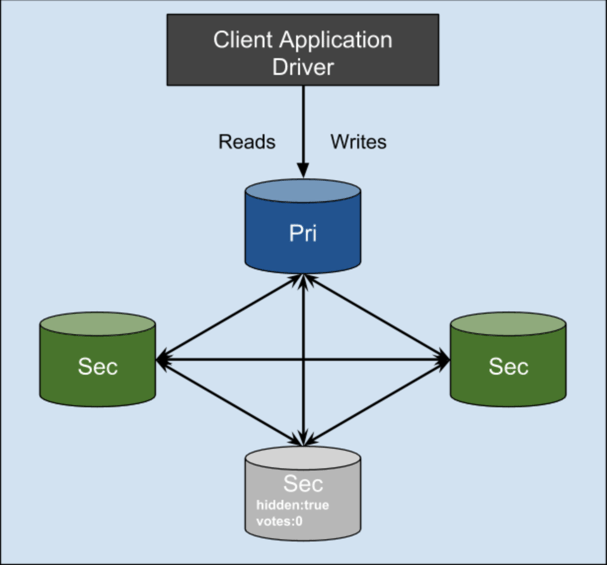

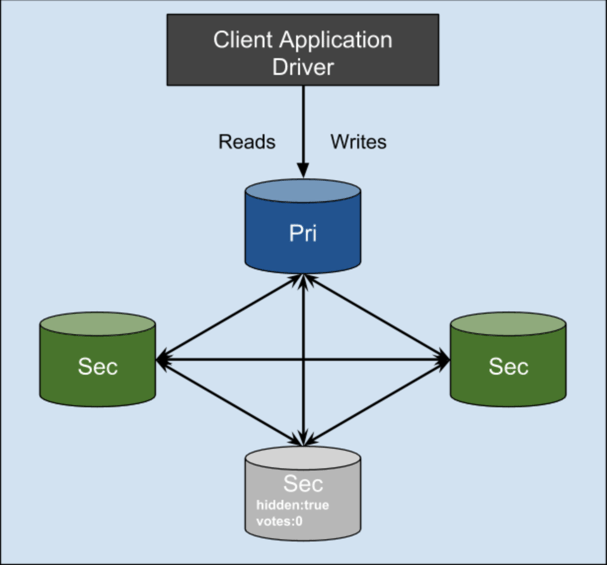

At first you may ask: why does the replica set have four nodes? Shouldn't there be an odd number of nodes rather than an even one? That is true: there should be an odd number of voting members in a replica set. In this case, there were three voting members and one hidden node with votes:0. The setup is shown in the diagram below:

[caption id="attachment_104347" align="alignleft" width="607"] MongoDB replica set[/caption]

If you are wondering why the setup has a hidden node, it is there only for taking nightly backups. Hidden nodes are not visible to the driver, and the application will not send queries to them. From the application's point of view, this setup behaves the same as a three-node replica set.

For the purposes of this demo, I have recreated the same replica set on my localhost by spinning up four mongod processes, each running on a different port. The output of rs.status() is shown below:

[code language="javascript"]
replica1:PRIMARY> rs.status()
{
    "set" : "replica1",
    "date" : ISODate("2018-05-28T11:22:25.154Z"),
    "myState" : 1,
    "term" : NumberLong(1),
    "heartbeatIntervalMillis" : NumberLong(2000),
    "members" : [
        {
            "_id" : 0,
            "name" : "localhost:37017",
            "health" : 1,
            "state" : 1,
            "stateStr" : "PRIMARY",
            "uptime" : 127,
            "optime" : { "ts" : Timestamp(1527506444, 2), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:20:44Z"),
            "infoMessage" : "could not find member to sync from",
            "electionTime" : Timestamp(1527506444, 1),
            "electionDate" : ISODate("2018-05-28T11:20:44Z"),
            "configVersion" : 1,
            "self" : true
        },
        {
            "_id" : 1,
            "name" : "localhost:37018",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 111,
            "optime" : { "ts" : Timestamp(1527506444, 2), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:20:44Z"),
            "lastHeartbeat" : ISODate("2018-05-28T11:22:24.521Z"),
            "lastHeartbeatRecv" : ISODate("2018-05-28T11:22:23.977Z"),
            "pingMs" : NumberLong(0),
            "syncingTo" : "localhost:37017",
            "configVersion" : 1
        },
        {
            "_id" : 2,
            "name" : "localhost:37019",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 111,
            "optime" : { "ts" : Timestamp(1527506444, 2), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:20:44Z"),
            "lastHeartbeat" : ISODate("2018-05-28T11:22:24.521Z"),
            "lastHeartbeatRecv" : ISODate("2018-05-28T11:22:24.070Z"),
            "pingMs" : NumberLong(0),
            "syncingTo" : "localhost:37017",
            "configVersion" : 1
        },
        {
            "_id" : 3,
            "name" : "localhost:37020",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 111,
            "optime" : { "ts" : Timestamp(1527506444, 2), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:20:44Z"),
            "lastHeartbeat" : ISODate("2018-05-28T11:22:24.521Z"),
            "lastHeartbeatRecv" : ISODate("2018-05-28T11:22:24.065Z"),
            "pingMs" : NumberLong(0),
            "syncingTo" : "localhost:37017",
            "configVersion" : 1
        }
    ],
    "ok" : 1
}
replica1:PRIMARY>
[/code]

At first glance everything seems fine. There is one primary node and there are three secondary nodes. We know that one of the secondaries is hidden and should have votes:0. Let’s take a closer look at the rs.conf() output.

[code language="javascript"]
replica1:PRIMARY> rs.conf()
{
    "_id" : "replica1",
    "version" : 2,
    "protocolVersion" : NumberLong(1),
    "members" : [
        {
            "_id" : 0,
            "host" : "localhost:37017",
            "arbiterOnly" : false,
            "buildIndexes" : true,
            "hidden" : false,
            "priority" : 1,
            "tags" : { },
            "slaveDelay" : NumberLong(0),
            "votes" : 1
        },
        {
            "_id" : 1,
            "host" : "localhost:37018",
            "arbiterOnly" : false,
            "buildIndexes" : true,
            "hidden" : false,
            "priority" : 0,
            "tags" : { },
            "slaveDelay" : NumberLong(0),
            "votes" : 1
        },
        {
            "_id" : 2,
            "host" : "localhost:37019",
            "arbiterOnly" : false,
            "buildIndexes" : true,
            "hidden" : false,
            "priority" : 0,
            "tags" : { },
            "slaveDelay" : NumberLong(0),
            "votes" : 1
        },
        {
            "_id" : 3,
            "host" : "localhost:37020",
            "arbiterOnly" : false,
            "buildIndexes" : true,
            "hidden" : true,
            "priority" : 0,
            "tags" : { },
            "slaveDelay" : NumberLong(0),
            "votes" : 0
        }
    ],
    "settings" : {
        "chainingAllowed" : true,
        "heartbeatIntervalMillis" : 2000,
        "heartbeatTimeoutSecs" : 10,
        "electionTimeoutMillis" : 10000,
        "getLastErrorModes" : { },
        "getLastErrorDefaults" : { "w" : 1, "wtimeout" : 0 },
        "replicaSetId" : ObjectId("5b0be6019cb045df012e2601")
    }
}
replica1:PRIMARY>
[/code]
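The configuration above is long, and the three fields that matter for elections are easy to miss. As a minimal sketch, the members can be condensed to one line each. The `members` array below is a hand-trimmed copy of the rs.conf() output, and `summarizeMembers` is a hypothetical helper name, not a MongoDB function.

```javascript
// Hand-trimmed copy of the "members" array from the rs.conf() output above.
// Only the fields relevant to elections are kept.
const members = [
  { _id: 0, host: "localhost:37017", hidden: false, priority: 1, votes: 1 },
  { _id: 1, host: "localhost:37018", hidden: false, priority: 0, votes: 1 },
  { _id: 2, host: "localhost:37019", hidden: false, priority: 0, votes: 1 },
  { _id: 3, host: "localhost:37020", hidden: true,  priority: 0, votes: 0 }
];

// Hypothetical helper: build one summary line per member.
function summarizeMembers(members) {
  return members.map(m =>
    `${m.host} votes=${m.votes} priority=${m.priority} hidden=${m.hidden}`);
}

summarizeMembers(members).forEach(line => console.log(line));
```

Against a live replica set, the same helper could presumably be fed `rs.conf().members` directly in the shell.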

The first three nodes have votes:1 and hidden:false, but there is one additional option to look at: priority. As you may notice, only one node has priority:1; all other nodes are running with priority:0. What is priority in a replica set? According to the MongoDB documentation, priority is a number that indicates the relative eligibility of a member to become a primary. What does this mean in our case? With priority:1 set on only one node, that node is the only one eligible to become the primary. If this node fails, no other node will call an election, because their priority is set to 0. Let’s demonstrate this by killing the process for the primary mongod running on port 37017. We will kill process 7200 and wait for the replica set to elect a new primary.

[code language="bash"]
root@control:/opt/mongodb# ps aux | grep mongo
root 7200 1.5 4.7 706048 97416 ? Sl 13:20 0:14 /opt/mongodb/bin/mongod -f /opt/mongodb/rs11.conf
root 7216 1.4 2.9 688584 60516 ? Sl 13:20 0:13 /opt/mongodb/bin/mongod -f /opt/mongodb/rs12.conf
root 7242 1.4 2.8 689608 58180 ? Sl 13:20 0:14 /opt/mongodb/bin/mongod -f /opt/mongodb/rs13.conf
root 7268 1.4 2.7 689864 56208 ? Sl 13:20 0:13 /opt/mongodb/bin/mongod -f /opt/mongodb/rs14.conf
root@control:/opt/mongodb# kill 7200
root@control:/opt/mongodb# ps aux | grep mongo
root 7216 1.4 2.9 688584 60516 ? Sl 13:20 0:13 /opt/mongodb/bin/mongod -f /opt/mongodb/rs12.conf
root 7242 1.4 2.8 689608 58180 ? Sl 13:20 0:14 /opt/mongodb/bin/mongod -f /opt/mongodb/rs13.conf
root 7268 1.4 2.7 689864 56208 ? Sl 13:20 0:13 /opt/mongodb/bin/mongod -f /opt/mongodb/rs14.conf
[/code]

Let's check the rs.status() output again. We expect the process running on port 37017 to be down and all other processes to be up.

[code language="javascript"]
replica1:SECONDARY> rs.status()
{
    "set" : "replica1",
    "date" : ISODate("2018-05-28T11:40:42.038Z"),
    "myState" : 2,
    "term" : NumberLong(1),
    "heartbeatIntervalMillis" : NumberLong(2000),
    "members" : [
        {
            "_id" : 0,
            "name" : "localhost:37017",
            "health" : 0,
            "state" : 8,
            "stateStr" : "(not reachable/healthy)",
            "uptime" : 0,
            "optime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
            "optimeDate" : ISODate("1970-01-01T00:00:00Z"),
            "lastHeartbeat" : ISODate("2018-05-28T11:40:37.241Z"),
            "lastHeartbeatRecv" : ISODate("2018-05-28T11:39:20.134Z"),
            "pingMs" : NumberLong(0),
            "lastHeartbeatMessage" : "Connection refused",
            "configVersion" : -1
        },
        {
            "_id" : 1,
            "name" : "localhost:37018",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 1224,
            "optime" : { "ts" : Timestamp(1527506674, 1), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:24:34Z"),
            "infoMessage" : "could not find member to sync from",
            "configVersion" : 2,
            "self" : true
        },
        {
            "_id" : 2,
            "name" : "localhost:37019",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 1206,
            "optime" : { "ts" : Timestamp(1527506674, 1), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:24:34Z"),
            "lastHeartbeat" : ISODate("2018-05-28T11:40:37.290Z"),
            "lastHeartbeatRecv" : ISODate("2018-05-28T11:40:37.264Z"),
            "pingMs" : NumberLong(0),
            "configVersion" : 2
        },
        {
            "_id" : 3,
            "name" : "localhost:37020",
            "health" : 1,
            "state" : 2,
            "stateStr" : "SECONDARY",
            "uptime" : 1206,
            "optime" : { "ts" : Timestamp(1527506674, 1), "t" : NumberLong(1) },
            "optimeDate" : ISODate("2018-05-28T11:24:34Z"),
            "lastHeartbeat" : ISODate("2018-05-28T11:40:37.290Z"),
            "lastHeartbeatRecv" : ISODate("2018-05-28T11:40:37.289Z"),
            "pingMs" : NumberLong(4),
            "configVersion" : 2
        }
    ],
    "ok" : 1
}
replica1:SECONDARY>
[/code]

The node running on port 37017 is reported as not reachable/healthy, and no other node has been elected primary. You might expect the replica set to elect a new primary, since there are two other healthy secondary nodes (excluding the hidden node), but this is not the case. Because the remaining nodes all have priority:0, none of them is eligible to become primary. How do we fix this?
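The reasoning above can be sketched as a small check. It is a deliberate simplification, assuming only two conditions: a majority of voting members must be reachable, and at least one reachable member must have a priority greater than zero. Real elections also take other factors into account, such as how up to date a candidate is. The `members` array is trimmed by hand from the rs.conf() output, and `canElectPrimary` is a hypothetical helper name.

```javascript
// Hand-trimmed copy of the members from rs.conf(); only election-related fields.
const members = [
  { host: "localhost:37017", priority: 1, votes: 1 },
  { host: "localhost:37018", priority: 0, votes: 1 },
  { host: "localhost:37019", priority: 0, votes: 1 },
  { host: "localhost:37020", priority: 0, votes: 0 } // hidden backup node
];

// Hypothetical helper: simplified model of "can this set elect a primary?"
function canElectPrimary(members, downHosts) {
  const isUp = m => !downHosts.includes(m.host);
  const voters = members.filter(m => m.votes > 0);
  const majority = Math.floor(voters.length / 2) + 1; // 3 voters -> 2
  const votersUp = voters.filter(isUp).length;
  // Members with priority 0 can vote but can never become primary.
  const candidates = members
    .filter(m => isUp(m) && m.priority > 0)
    .map(m => m.host);
  return {
    majority: majority,
    votersUp: votersUp,
    candidates: candidates,
    electable: votersUp >= majority && candidates.length > 0
  };
}

// The failure from the demo: the only member with priority > 0 is down.
console.log(canElectPrimary(members, ["localhost:37017"]));
```

In this model the set still has a voting majority (two of three voters are up), so the missing ingredient is purely an eligible candidate.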

We have to reconfigure the replica set so that more than one node has a priority greater than zero. I will leave this as an exercise for you (add a comment below if you need help), and will only mention that if we want a particular node to be the preferred primary, we can set its priority to ten and give the other electable nodes a priority lower than ten but greater than zero. In that case a new primary is elected when the preferred one goes down. As soon as the former primary rejoins the replica set and catches up, it will call an election and become the primary again because it has the highest priority.
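One possible shape of that reconfiguration is sketched below as plain JavaScript on a copy of the configuration document. The `cfg` object is trimmed by hand from the rs.conf() output, `withPriorities` is a hypothetical helper, and the priority values 10 and 5 are only an illustration of "ten and less than ten".

```javascript
// Hand-trimmed copy of the rs.conf() document shown earlier.
const cfg = {
  _id: "replica1",
  members: [
    { _id: 0, host: "localhost:37017", hidden: false, priority: 1, votes: 1 },
    { _id: 1, host: "localhost:37018", hidden: false, priority: 0, votes: 1 },
    { _id: 2, host: "localhost:37019", hidden: false, priority: 0, votes: 1 },
    { _id: 3, host: "localhost:37020", hidden: true,  priority: 0, votes: 0 }
  ]
};

// Hypothetical helper: return a new config with the given per-host priorities.
// Hosts that are not listed (here, the hidden node) keep their current priority.
function withPriorities(cfg, priorities) {
  const members = cfg.members.map(m =>
    Object.prototype.hasOwnProperty.call(priorities, m.host)
      ? Object.assign({}, m, { priority: priorities[m.host] })
      : m);
  return Object.assign({}, cfg, { members: members });
}

const newCfg = withPriorities(cfg, {
  "localhost:37017": 10, // preferred primary
  "localhost:37018": 5,  // can take over on failure
  "localhost:37019": 5   // can take over on failure
});

console.log(newCfg.members.map(m => `${m.host} priority=${m.priority}`).join("\n"));

// In the mongo shell, the equivalent would be to start from rs.conf(), adjust
// the priorities, and apply the result with rs.reconfig(newCfg) on the primary.
// When no primary is available, as in the demo, rs.reconfig(newCfg, { force: true })
// on a surviving member is the escape hatch; it should be used with care.
```

The hidden node is left at priority:0 on purpose, since it exists only for backups and should never become primary.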

Conclusion

Replica sets provide redundancy and high availability. Failover in a MongoDB replica set is automatic, but there must be an eligible secondary member. Running a replica set with priority:1 on only one node is dangerous and may cause downtime. It can be hard to figure out why a replica set does not elect a new primary even though there are healthy secondary members. The configuration options in a replica set give you the flexibility to build different setups, but you should always use them with caution.

Find out how Pythian can help you with all of your MongoDB needs.